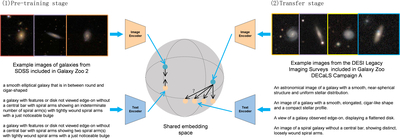

Figure 1. Illustration of the two-stage training framework used in CLIP-GMC. In the first stage, the image encoder and the text encoder are trained on SDSS galaxy images included in Galaxy Zoo 2, paired with text descriptions generated by decision trees, and both encoders map their inputs into a shared embedding space. In the second stage, a small number of DESI galaxy images and their corresponding category descriptions are used for transfer training. Classification is then performed from the similarity between the text and image embeddings.
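The caption describes two mechanisms: contrastive alignment of paired image and text embeddings (first stage) and classification by image-text similarity (second stage). The sketch below shows a generic CLIP-style version of both in pure Python, so it runs without dependencies. It is not the CLIP-GMC implementation. The symmetric cross-entropy loss, the cosine similarity, the softmax over class descriptions and the temperature of 0.07 (CLIP's initial value) are assumptions. The toy 3-dimensional embeddings and the category names are hypothetical stand-ins for real encoder outputs.

```python
import math
from typing import Dict, List, Sequence, Tuple


def l2_normalize(v: Sequence[float]) -> List[float]:
    """Scale a vector to unit length so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in v]


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity between two embeddings in the shared space."""
    return sum(x * y for x, y in zip(l2_normalize(a), l2_normalize(b)))


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtracts the max logit before exponentiating)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def clip_contrastive_loss(image_embs, text_embs, temperature: float = 0.07) -> float:
    """Assumed stage-one objective: symmetric cross-entropy over the N x N
    similarity matrix, where image i and text i form the matching pair."""
    n = len(image_embs)
    sims = [[cosine_similarity(i, t) / temperature for t in text_embs] for i in image_embs]
    # Image-to-text direction: each row should put its mass on the diagonal.
    loss_i2t = -sum(math.log(softmax(sims[r])[r]) for r in range(n))
    # Text-to-image direction: each column should put its mass on the diagonal.
    loss_t2i = -sum(math.log(softmax([sims[r][c] for r in range(n)])[c]) for c in range(n))
    return (loss_i2t + loss_t2i) / (2 * n)


def classify(image_emb, class_text_embs: Dict[str, Sequence[float]],
             temperature: float = 0.07) -> Tuple[str, Dict[str, float]]:
    """Assumed stage-two prediction: score one image against the embedding of
    each category description and return the most similar category."""
    labels = list(class_text_embs)
    logits = [cosine_similarity(image_emb, class_text_embs[k]) / temperature for k in labels]
    scores = dict(zip(labels, softmax(logits)))
    best = max(scores, key=scores.get)
    return best, scores


# Hypothetical toy embeddings standing in for real encoder outputs.
class_texts = {
    "smooth": [1.0, 0.0, 0.0],
    "disk": [0.0, 1.0, 0.0],
    "edge-on": [0.0, 0.0, 1.0],
}
label, probs = classify([0.9, 0.2, 0.1], class_texts)
print(label)  # smooth
```

The temperature divides the cosine similarities before the softmax. A small value sharpens the distribution, so the closest category description dominates the prediction. In CLIP the temperature is a learned parameter, and the value used by CLIP-GMC is not stated here.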

© 2026. The Author(s). Published by the American Astronomical Society.